In recent years, the conversation about artificial intelligence and employment has been dominated by a substitution narrative: Which jobs will disappear? How many jobs will be replaced by algorithms? While these questions are important, they have led us to view the future of work from a narrow perspective. Isabella Loaiza, a postdoctoral researcher at the MIT Sloan School of Management, recently presented a different perspective at the Center for Analytics for Public Policy (CAPP): instead of asking which jobs will disappear, we should be asking how humans and machines can best complement each other.

What makes this research particularly relevant is that it’s not just a philosophical difference. The issue is methodological. The metrics that currently dominate the debate on AI and employment are, almost by design, biased toward automation.

The most influential metric in this field is "exposure," which measures how vulnerable a task is to being impacted by artificial intelligence. It sounds reasonable, but it has a fundamental problem: it doesn’t distinguish between automation (when AI replaces humans) and augmentation (when AI complements human capabilities).

This limitation matters because existing metrics rarely account for the fact that tasks within an occupation are interconnected. A lawyer doesn’t just review contracts or present arguments before a judge in isolation; these tasks are intertwined, complement each other, and enhance one another. And it is precisely in those interconnections where the true potential of human–machine collaboration emerges.

To develop a more comprehensive metric, Loaiza and her team began with a fundamental question: What are the human capabilities that artificial intelligence cannot easily replicate? From their interviews with over 400 professionals across various fields, they identified five critical limitations of AI:

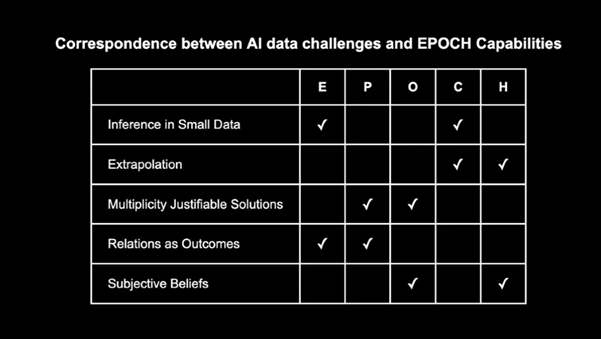

Based on these five limitations, the research team distilled five groups of distinctive human capabilities, creating the acronym EPOCH:

E – Empathy and Emotional Intelligence: Genuine compassion: feeling with another. Language models can generate responses that seem empathetic, but they lack true understanding rooted in the shared experience of being human.

P – Presence: The quality of being in the same physical space. COVID showed us, in dramatic fashion, which aspects of work and life are truly irreplaceable when it comes to being present in person.

O – Opinion and Ethical Judgment: Critical thinking, causal reasoning, and intuition. The ability to question assumptions, see non-obvious connections, and make nuanced judgments in complex contexts.

C – Creativity, Curiosity, and Imagination: LLMs can generate content that surpasses the average human—but not the best humans in their respective creative fields.

H – Hope, Vision, and Leadership: The ability to act even when the chances of success are minimal. To imagine a radically different future and mobilize others toward it.

With the EPOCH capabilities defined, the team faced a methodological challenge: how do you measure these capabilities across the thousands of tasks that make up the labor market? They used the O*NET database, which catalogs 19,000 tasks distributed across 900 occupations in the U.S. labor market.

Here’s where the innovation comes in: they employed an embedding model (derived from BERT) to measure the semantic similarity between each task and the five EPOCH capabilities. This allowed them to classify each task based on its alignment with these distinctive human abilities. Yes, using a language model like BERT introduces some methodological opacity, but it also enables analysis at a scale that would be impossible to perform manually.
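To make the similarity step concrete, here is a minimal sketch of scoring a task against the five capabilities with cosine similarity. It is not the authors' pipeline: the `embed` function is a toy bag-of-words stand-in for a BERT-derived embedding model, and the capability descriptions in `EPOCH` are hypothetical wording chosen for illustration.

```python
import math
from collections import Counter

# Hypothetical one-line descriptions of the five EPOCH capabilities
# (illustrative wording, not taken from the paper).
EPOCH = {
    "E": "empathy emotional intelligence compassion",
    "P": "presence physical in person",
    "O": "opinion ethical judgment critical thinking",
    "C": "creativity curiosity imagination",
    "H": "hope vision leadership",
}

def embed(text):
    # Toy stand-in for a sentence-embedding model: a word-count vector.
    # A real analysis would return a dense vector from the model instead.
    return Counter(text.lower().split())

def cosine(a, b):
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def epoch_scores(task):
    # Similarity of one task description to each capability description.
    task_vec = embed(task)
    return {key: cosine(task_vec, embed(desc)) for key, desc in EPOCH.items()}

scores = epoch_scores("exercise ethical judgment when advising clients")
best = max(scores, key=scores.get)  # capability the task aligns with most
```

With a real embedding model only `embed` would change; the scoring loop stays the same, which is what makes the approach feasible across thousands of tasks.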

But the real conceptual leap came afterward. Instead of analyzing tasks in isolation, they created task networks to capture their complementarity within each occupation. The logic is elegant: if two tasks are interconnected within a profession, and one has a high EPOCH score (highly human) while the other has a low EPOCH score (easily automatable), then there’s high augmentation potential. In that scenario, humans and machines can distribute the work or collaborate to solve both tasks more effectively.

In contrast, if both tasks have high EPOCH scores, they are likely resistant to automation. And if both have low EPOCH scores, they may both be fully automatable.
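The three cases above can be sketched as a simple rule over linked task pairs. This is an illustration under stated assumptions, not the paper's scoring formula: the `threshold` of 0.5, the task names, the scores, and the links between tasks are all hypothetical.

```python
def classify_pair(score_a, score_b, threshold=0.5):
    # threshold is an assumed cut-off between "high" and "low" EPOCH scores.
    high_a, high_b = score_a >= threshold, score_b >= threshold
    if high_a and high_b:
        return "resistant to automation"
    if not high_a and not high_b:
        return "likely automatable"
    return "augmentation potential"  # one high-EPOCH task linked to one low

# Hypothetical EPOCH scores for three tasks in one occupation.
tasks = {
    "argue before judge": 0.9,
    "review standard documents": 0.2,
    "research precedent": 0.3,
}

# Hypothetical links between tasks that complement each other.
edges = [
    ("argue before judge", "research precedent"),
    ("review standard documents", "research precedent"),
]

labels = {edge: classify_pair(tasks[edge[0]], tasks[edge[1]]) for edge in edges}

# Share of linked pairs that mix a high-EPOCH and a low-EPOCH task.
augmentation_share = sum(
    label == "augmentation potential" for label in labels.values()
) / len(edges)
```

Counting mixed pairs per occupation gives a rough occupation-level indicator; the actual measure in the research may weight links or scores differently.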

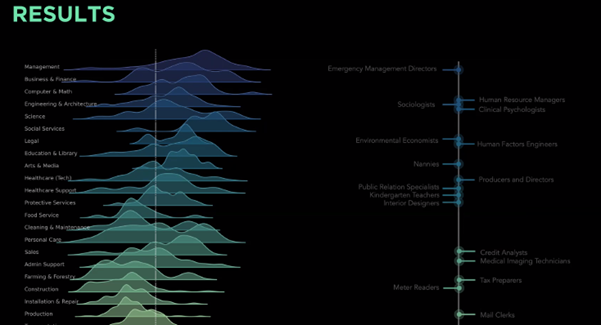

The results from applying this methodology revealed interesting patterns that the traditional “exposure” metric fails to capture.

The occupations with the highest EPOCH scores turned out to be management and nursing. These professions are at low risk of automation but—surprisingly—also show low augmentation potential as currently measured. Why? Because the augmentation measured here is based on the complementarity between high- and low-EPOCH tasks. When nearly all of your tasks require deeply human capabilities, there’s less room to delegate components to AI.

But this highlights an important limitation: this measure of augmentation only captures task-based collaboration—that is, the distribution of existing tasks. It does not capture what we might call expansive augmentation: the ability to do things that were previously impossible. Before the microscope, seeing microorganisms wasn’t just difficult—it was inconceivable. This dimension of augmentation is still something we don’t know how to measure adequately.

The most fascinating case was the legal profession. These occupations displayed bimodal EPOCH distributions: one cluster of tasks with very low EPOCH scores (bureaucratic work, standard document review, precedent research), and another cluster with very high EPOCH scores (arguing before judges, complex negotiation, strategic advising requiring ethical judgment). This two-cluster structure signals enormous augmentation potential: AI can take over low-EPOCH work, freeing professionals to focus on the dimensions that require genuinely human capabilities.

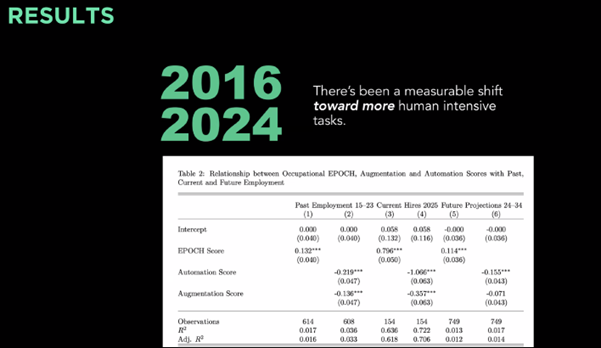

Perhaps the most hopeful finding emerges from comparing data between 2016 and 2024. Contrary to fears that automation is dehumanizing work, the researchers found a measurable shift toward more human tasks—those more intensive in EPOCH capabilities.

The new tasks that appeared in the dataset between 2016 and 2024 had, on average, higher EPOCH scores than the tasks that were removed. High-EPOCH tasks increased in frequency. And when they analyzed employment growth, they found a positive correlation with the EPOCH score: occupations rich in distinctive human capabilities are growing.

The augmentation score also showed a positive correlation with employment growth, though weaker than that of EPOCH. In contrast, the automation score showed the opposite pattern: a negative correlation with employment growth.
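For readers who want to see what such a comparison looks like mechanically, here is a small sketch of a Pearson correlation between occupation-level scores and employment growth. The numbers are made up purely for illustration and are not results from the paper; the variable names are also assumptions.

```python
import math

def pearson(xs, ys):
    # Pearson correlation coefficient between two equal-length sequences.
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Illustrative values for four hypothetical occupations (NOT real data).
epoch_score = [0.2, 0.4, 0.6, 0.8]
automation_score = [0.8, 0.6, 0.4, 0.2]
employment_growth = [-1.0, 0.5, 1.0, 2.5]  # percent change, made up

r_epoch = pearson(epoch_score, employment_growth)
r_automation = pearson(automation_score, employment_growth)
```

In this toy data the EPOCH correlation is positive and the automation correlation is negative, mirroring the direction of the reported pattern; it says nothing about the actual magnitudes.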

What this research shows us is that the questions we’ve been asking are biased. Instead of obsessing over which jobs will disappear, we should be asking how we can design better synergies between humans and machines. Instead of assuming AI will replace us, we should identify where it can free us to do what we do best: empathize, create, judge with values, build relationships, and imagine alternative futures.

Metrics matter because they shape our policies, our investments in education, and our decisions about which skills to cultivate. If our metrics only capture the risk of automation, our policies will focus on defense and resistance. But if our metrics also capture the potential for augmentation, our policies can focus on the deliberate design of better human–machine collaborations.

This research does not deny that AI will profoundly transform the world of work. But it offers us a more complete vision for understanding that transformation. And it reminds us that the future of work is not predetermined by technology—it will be shaped by the questions we ask, the metrics we build, and the complementarities we choose to design.


References

Loaiza, Isabella and Rigobon, Roberto. The EPOCH of AI: Human-Machine Complementarities at Work (November 21, 2024). MIT Sloan Research Paper No. 7236-24. Available at SSRN: https://ssrn.com/abstract=5028371 or http://dx.doi.org/10.2139/ssrn.5028371
