Many of us still remember how, in the 1987 movie RoboCop, the policeman had a visor that allowed him to identify people from their faces. More than 30 years later, and in a much less intimidating way, several countries around the world already have integrated camera systems with facial recognition that support police departments in detecting and fighting crime. What is only now beginning to be examined, however, are the ethical implications regarding privacy, misuse of images, and the potential biases that AI can replicate.

Facial recognition currently supports police work in several ways. In Spain, a system called ABIS (automatic biometric identification system) was built on an algorithm called "Cogent Face Recognition Platform (FRP)", developed by the French company THALES, that can identify an individual from any type of image of his or her face (El País, 2022). Similarly, according to a report by Amnesty International, between 2017 and 2021 the New York Police Department used facial recognition to track suspects in 22,000 cases, drawing on an integrated system of more than 15,000 cameras (Amnesty International, 2021). The technology is also in use in Colombia. In Cúcuta, in 2022, a pilot facial recognition scheme was developed that cross-referenced information from the National Police's detainee databases (Semana, 2022). In 2017, Envigado invested more than 2,700 million pesos in cameras with facial recognition software, and cities such as Bogotá, Cali, Cartagena, and Barranquilla have had this technology since 2015.

However, these developments raise real questions about privacy, algorithmic ethics, and citizens' rights. Probably the best-known effort is the #BanTheScan initiative by Amnesty International. According to the organization, it was "after the explosion of certain racist performances", in which cameras with facial recognition were used to identify and prosecute individuals who protested during the Black Lives Matter movement, that it launched a campaign with more than 5,000 volunteers and 18,000 hours of work to document the location of AI cameras in New York and to demand transparency in the use of this technology. But it is important to ask: Why is transparency in these systems important? What problems can a technology intended to recognize criminals bring? Is it really true that those who have nothing to hide have nothing to fear?

Ethical issues in crime

Algorithmic ethics has grown in importance with the increasing use of artificial intelligence for decision-making. The learning models that have shown the best technical performance in recent years are the so-called "black box" models, which include deep neural networks and random forests. The name is no coincidence: these models produce impressive results, but few people understand how they do it. Explaining why a neural network predicted that an image shows a dog and not a cat is a Herculean task, even when the answer is correct. When classifying images, the interpretability of the model does not seem to be a big problem. But when models are used to decide whether to grant credit, to predict whether an offender will re-offend, or to judge whether a person is suitable for a job, it is crucial to understand the "how" of that decision.

Lack of interpretability can hide underlying problems in the models, such as biases. If, in determining whether a person is suitable for a job vacancy, a resume receives a low score simply because it belongs to a woman, the model is biased. These biases stem from the data and, therefore, from the historical prejudices embedded in it. If we do not wish to perpetuate these biases, we must prevent the models we use from reproducing them.
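One simple way to probe for the kind of bias described above is a counterfactual test: change only the protected attribute and see whether the score moves. The sketch below is purely illustrative. The `Resume` fields, the `biased_score` function, and its penalty are hypothetical and were invented for this example; they do not describe any real hiring system.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Resume:
    years_experience: int
    has_degree: bool
    gender: str  # "F" or "M"; the protected attribute in this toy example


def biased_score(resume: Resume) -> float:
    """Hypothetical scoring model that (wrongly) penalizes women."""
    score = 10.0 * resume.years_experience + (20.0 if resume.has_degree else 0.0)
    if resume.gender == "F":
        score -= 15.0  # the hidden bias we want to surface
    return score


def counterfactual_gap(score_fn, resume: Resume) -> float:
    """Score change when only the gender field is flipped."""
    flipped = replace(resume, gender="M" if resume.gender == "F" else "F")
    return score_fn(flipped) - score_fn(resume)


candidate = Resume(years_experience=5, has_degree=True, gender="F")
gap = counterfactual_gap(biased_score, candidate)
print(f"Score change after flipping gender: {gap:+.1f}")
```

A gap different from zero means the score depends directly on gender. A gap of zero does not prove the model is fair, since other fields can act as proxies for the protected attribute.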

Bias in crime models has been studied for some time, and multiple biases have been detected in recidivism models in particular. In 2019, a study compared different ways of assigning recidivism probabilities to juvenile offenders in Catalonia (Tolan et al., 2019). The trained learning models showed better technical performance than the previously used methodology, which consisted of a form that the young people had to complete. However, these models still discriminated by gender and ethnicity. Relying on them solely because of their better technical performance could therefore disadvantage certain population groups on the basis of characteristics unrelated to their criminal behavior. The best-known case is the COMPAS tool, which has been used throughout the United States to help judges make bail decisions by predicting defendants' risk of recidivism. Several studies have shown that this tool is biased and disadvantages certain population groups, primarily people under the age of 25 and African Americans.
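A common way to check whether a risk model disadvantages a group is to compare error rates across groups, for example the share of people who did not re-offend but were still flagged as high risk. The following is a minimal sketch with made-up records. The group names and values are hypothetical, and this is not the methodology of the studies mentioned above.

```python
from collections import defaultdict

# Hypothetical records: (group, predicted_high_risk, actually_reoffended)
records = [
    ("A", True, False), ("A", True, True), ("A", False, False), ("A", True, False),
    ("B", False, False), ("B", True, True), ("B", False, False), ("B", True, False),
]


def false_positive_rate_by_group(rows):
    """Share of non-reoffenders in each group who were flagged as high risk."""
    flagged = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted, reoffended in rows:
        if not reoffended:
            negatives[group] += 1
            if predicted:
                flagged[group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}


rates = false_positive_rate_by_group(records)
for group, rate in sorted(rates.items()):
    print(f"group {group}: false positive rate = {rate:.2f}")
```

In this toy data, group A is wrongly flagged twice as often as group B, even though both groups contain the same number of people who did not re-offend. A model can have good overall accuracy and still show this kind of gap.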

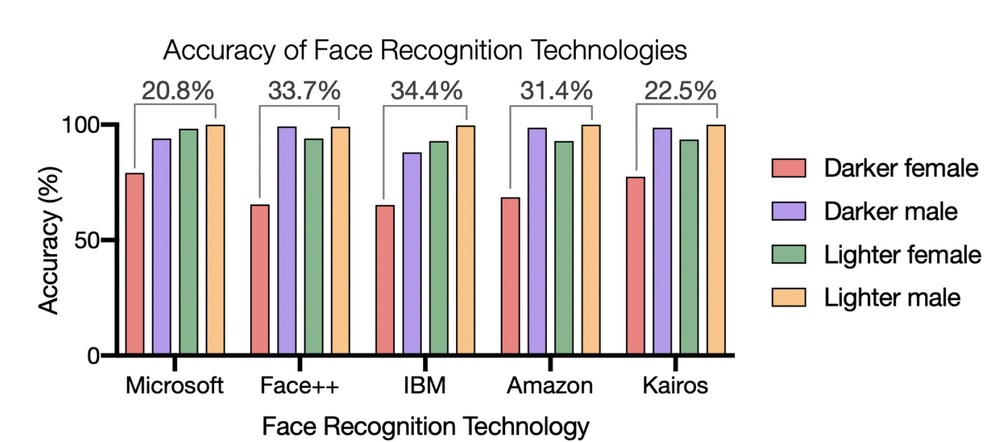

Facial recognition algorithms, in turn, are known to show performance disparities between people of different genders and races, and researchers have been studying the impact of these disparities since 2018. Artificial intelligence algorithms learn from the data they are trained on: if 80% of the training images correspond to white people, the algorithm will likely perform better for this population group. The problems of facial recognition go beyond the technical inconvenience of, for example, a device failing to recognize its user. When these algorithms are combined with security policies, the damage can be enormous. In 2020, Harvard University analyzed the potential impact on society of such performance differences between population groups (Figure 1).

"Continued surveillance induces fear and psychological harm, leaving subjects vulnerable to targeted abuse, as well as physical harm, by expanding systems of government oversight used to deny access to health care and social welfare. In a criminal justice setting, facial recognition technologies that are inherently biased in their accuracy can misidentify suspects, imprisoning innocent African Americans." (Harvard University, 2020).

Figure 1. Harvard University. Racial Discrimination in Face Recognition Technology. Blog, Science Policy, Special Edition: Science policy and social justice. (2020).
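The training-data imbalance mentioned earlier (for example, 80% of images from a single group) can be checked before a model is trained. The sketch below assumes each training image carries a group label. The labels, counts, and the 15% threshold are hypothetical choices made for illustration only.

```python
from collections import Counter

# Hypothetical group labels attached to 100 training images.
training_groups = ["white"] * 80 + ["black"] * 10 + ["asian"] * 6 + ["other"] * 4


def group_shares(labels):
    """Fraction of the dataset that belongs to each group."""
    counts = Counter(labels)
    total = len(labels)
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares, min_share):
    """Groups whose share falls below an analyst-chosen threshold."""
    return sorted(g for g, s in shares.items() if s < min_share)


shares = group_shares(training_groups)
flagged = underrepresented(shares, min_share=0.15)
print(shares)
print("Underrepresented groups:", flagged)
```

A balanced dataset does not guarantee equal performance, so a check like this complements, rather than replaces, measuring accuracy separately for each group.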

These findings on crime models lead us to reflect on the facial recognition algorithms used in our cities. How can we ensure that the models currently employed by the police are not biased? Can these algorithms be trusted? What measures have been taken to mitigate biases and avoid perpetuating stereotypes?

S. Tolan, M. Miron, E. Gómez, C. Castillo. Why Machine Learning May Lead to Unfairness: Evidence from Risk Assessment for Juvenile Justice in Catalonia (2019)
